The Open Source Observability Landscape

Storing and Accessing Telemetry

Are you feeling overwhelmed by the huge explosion of choices for observability and monitoring in open source? How you are expected to develop and deploy applications is changing rapidly — from monoliths to microservices and more, complexity keeps growing. Luckily, however, there’s been a renaissance in open source innovations as projects including ELK, Prometheus, and Jaeger grow in popularity and usage. You might have even heard of service meshes like Istio that can generate telemetry automatically. How do you make sense of all these tools and how do they stack up when it comes to solving problems quickly? This guide is written to help you make sense of the most popular open source methods of storing and accessing telemetry, and how they relate to Honeycomb. In this paper, we’ll cover the storage systems for persisting and accessing observability data — there are also open source projects such as OpenTelemetry that define a format for exporting data to these storage systems, but we’ll save discussing those for another day.

Intro

It used to be a simpler time. You had fewer services, often deployed to only one powerful server, that you were responsible for. Most of the things that could go wrong with these monoliths were well understood. You logged slow database queries as they happened and added MySQL indexes. You spotted network issues easily with high level metrics. You monitored disk space and memory usage, and remedied shortages when needed. When something went wrong, the path to finding out what happened was straightforward: likely you would access a shell on a server, read the logs, deduce what was happening, and act accordingly to fix it.

Eventually, however, the Internet became a victim of its own success: traffic had to be served to more people, teams had to scale out their development, and novel challenges began to brew. Given Linux’s critical role in enabling large scale web applications in the first place, it’s no surprise that the open source heritage of the infrastructure carried forward to new troubleshooting tools. Programmers solving their own problems began to build out the next generation of tooling to solve these complicated new issues, and you stand to benefit by leveraging their work and experience.

General Costs and Benefits of OSS

While we will get into more specific details about projects further along in this guide, it’s worth reflecting on the general cost/benefit analysis for using open source tooling. One big advantage of open source tools is obvious — the sticker price is $0, and anyone can freely download and start tinkering with the software for their own needs immediately. There’s also a lot of appeal to the open source aspect in terms of addressing your own needs. If something goes wrong when running the software, you can go dive into the code and debug it. Likewise, if you have a particular need for a feature, no one is stopping you from developing it and working to get it contributed upstream. Open source politics aside, this is frequently a powerful model, and especially for those for whom ideological purity is important, the use of software where you have full unfettered access to the source code is attractive.

As is the case with most things in life, there’s a counterpoint to every advantage listed above. While it’s zero cost to start using the software, resources need to be invested in maintaining it, and especially for observability-scale infrastructure, some of the underlying data stores can get pretty operationally hairy. That uses up valuable person-cycles that should be available for developing new features and keeping the apps themselves running smoothly. Documentation and support on such projects is frequently patchy and on a best-effort basis: overworked and frustrated open source maintainers won’t take your requests as seriously as a business whose well-being depends on it.

We at Honeycomb are a vendor, and we encourage you to pay for software when it suits your needs. A vendor can often be your best friend by freeing you up to shave fewer yaks and do more of what you really want to do – deliver more software. That said, we think open source and vendors coexist quite well – for instance Prometheus, with its relative ease of operation and solid handling of metrics data, makes a natural complement to buyers and users of Honeycomb.

The Tools

We’ll go through a few examples of open source tools and describe what they are, how data is ingested into them, and a “Honeycomb Hot Take” on how their strengths and weaknesses compare to Honeycomb. In general, these tools fall into one of three buckets – logs, metrics, and tracing tools. Some folks have called these the three pillars of observability, but we don’t particularly agree with that characterization — instead, we think there’s a lot more potential in the observability movement than what these tools alone have to offer. Some of the outlined issues with the tools we’ll discuss might help illuminate why.

The tools we will go over are:

- Prometheus – A time series database for metrics

- Elasticsearch/Logstash/Kibana – commonly called “ELK” for short – A log storage, processing, and querying stack

- Jaeger – A system for distributed tracing

There are other tools similar to some of these, which we will note in the relevant sections, but these hit the primary points of what’s available in the “open source market” today.

Prometheus: what is it?

Prometheus is a time series database for metrics monitoring. Its popularity has grown alongside the groundswell of popularity around open source container deployment methods such as Docker and Kubernetes. In metrics, you store a numeric time series representing what is happening in your system. These time series can be counters, which are cumulative values that only ever increase (and start over from zero only when the process restarts), or gauges, which are point-in-time values that can go up or down. An example of a counter would be the number of HTTP requests seen: every time a new request comes in, you increment the counter. A gauge, on the other hand, would be something like the number of running Kubernetes pods, which rises and falls over time and always represents the current value. Prometheus also has support for two more sophisticated types, histograms and summaries, which allow you to analyze distributions of data.
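To make the counter/gauge distinction concrete, here is a minimal sketch in plain Python. The `Counter` and `Gauge` classes and the metric names are hypothetical stand-ins written for illustration; they are not the Prometheus client library.

```python
class Counter:
    """Cumulative value that only goes up (a process restart starts it at zero again)."""

    def __init__(self) -> None:
        self.value = 0.0

    def inc(self, amount: float = 1.0) -> None:
        if amount < 0:
            raise ValueError("counters can only increase")
        self.value += amount


class Gauge:
    """Point-in-time value that can rise or fall."""

    def __init__(self) -> None:
        self.value = 0.0

    def set(self, value: float) -> None:
        self.value = float(value)

    def inc(self, amount: float = 1.0) -> None:
        self.value += amount

    def dec(self, amount: float = 1.0) -> None:
        self.value -= amount


# Hypothetical metric names, chosen to mirror the examples in the text.
http_requests_total = Counter()
running_pods = Gauge()

for _ in range(3):          # three HTTP requests arrive
    http_requests_total.inc()

running_pods.set(5)         # five pods are running
running_pods.dec()          # one pod is terminated

print(http_requests_total.value)  # 3.0
print(running_pods.value)         # 4.0
```

Because a counter never decreases, a query layer can derive rates from the difference between two readings, while a gauge is read as-is.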

What would attract you to Prometheus? Its vibrant open source community, wide variety of integrations to get data in, and a more mature data model than traditional metrics systems like Graphite are all big selling points. In Prometheus, you can give each metric labels, which group metrics into segments of interest. For instance, a metric tracking HTTP request counts can be split according to status code, letting you see the number of HTTP 5XX errors. This is a big leap forward from Graphite, where metrics didn’t have any notion of such metadata, and segmenting had to be done using kludgy solutions such as giving each metric a unique name (app.http.status.500, for instance).
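The difference between labels and name-encoded metadata can be sketched with ordinary dictionaries. This is only an illustration of the two data models under assumed names (`http_requests_total`, the `method` and `status` labels, and the `sum_where` helper are all hypothetical), not how either system stores data internally.

```python
from collections import defaultdict

# Label-based model: one metric name, with labels kept as structured metadata.
# Each distinct combination of label values is its own time series.
labeled = defaultdict(float)


def observe(status: str, method: str) -> None:
    key = ("http_requests_total", (("method", method), ("status", status)))
    labeled[key] += 1


for status, method in [("200", "GET"), ("500", "GET"), ("503", "POST"), ("200", "GET")]:
    observe(status, method)


def sum_where(name, predicate):
    """Sum every series called `name` whose labels satisfy `predicate`."""
    return sum(
        value
        for (series_name, labels), value in labeled.items()
        if series_name == name and predicate(dict(labels))
    )


errors_5xx = sum_where("http_requests_total", lambda l: l["status"].startswith("5"))
print(errors_5xx)  # 2.0

# Name-encoded model: the status code is baked into the metric name,
# so segmenting means picking the name apart as a string.
dotted = {"app.http.status.200": 2.0, "app.http.status.500": 1.0, "app.http.status.503": 1.0}
errors_5xx_dotted = sum(v for name, v in dotted.items() if name.split(".")[-1].startswith("5"))
print(errors_5xx_dotted)  # 2.0
```

Both approaches reach the same total here, but adding a second dimension such as `method` only requires another label in the first model, whereas the dotted scheme needs a new naming convention.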

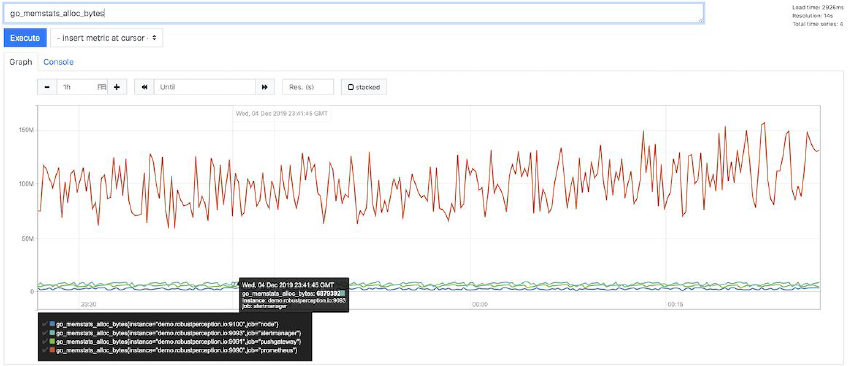

Prometheus also supports a dynamic query language, PromQL, which enables you to explore the underlying data in a rich and flexible manner. Prometheus is often paired with a dashboarding tool such as Grafana, which gives you an attractive and usable frontend for the underlying time series data. Prometheus also has a default UI for quick querying with PromQL directly.

Getting Data In

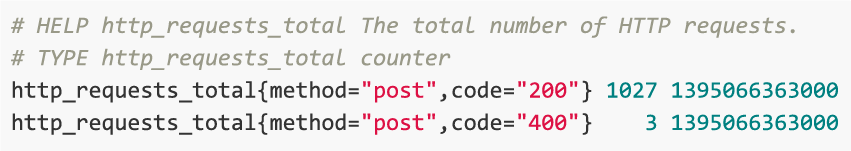

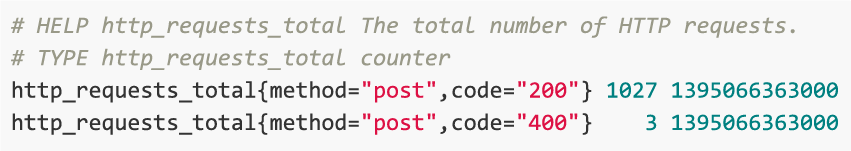

Prometheus is primarily based on a polling/scraping system, which issues simple HTTP requests to targets that expose data in a plain text format. The format looks like this.
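As a rough illustration, the sketch below embeds a small sample of the text format and reads it the way a scraper might. The metric names and values are made up, and the parser is deliberately simplified: it ignores optional timestamps, escaped characters in label values, and other details of the real format.

```python
import re

# Made-up sample: HELP/TYPE comment lines, then one sample per line.
SAMPLE = """\
# HELP http_requests_total Total HTTP requests handled.
# TYPE http_requests_total counter
http_requests_total{method="GET",status="200"} 1027
http_requests_total{method="GET",status="500"} 3
# HELP running_pods Number of pods currently running.
# TYPE running_pods gauge
running_pods 12
"""

# metric_name, optional {label="value",...} block, then the numeric value.
LINE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(?P<labels>.*)\})?\s+(?P<value>\S+)$'
)
LABEL = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def parse(text):
    """Return (name, labels, value) tuples for each sample line."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        match = LINE.match(line)
        if match is None:
            continue  # outside the subset this sketch understands
        labels = dict(LABEL.findall(match.group("labels") or ""))
        samples.append((match.group("name"), labels, float(match.group("value"))))
    return samples


for name, labels, value in parse(SAMPLE):
    print(name, labels, value)
```

Each scrape yields the current value of every series, and the server attaches the scrape time itself, which is why most lines carry no timestamp.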

Apps that publish Prometheus metrics therefore serve this format on an endpoint such as /app/metrics. Processes that are not long-lived (such as background jobs) can instead push their metrics to the Pushgateway, an intermediary that Prometheus then scrapes. Getting data into Prometheus, then, means exposing this text format somehow. In the case of host level metrics, you can install the Node Exporter on every host to get some solid out-of-the-box scrape pages for things like CPU, memory, and disk usage.

The most valuable metrics such as error rates are exported from apps themselves in code that relies on Prometheus client bindings. For instance, to use a counter, you would update your code to have Prometheus-specific code like the following example.
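The sketch below shows the general shape of such instrumentation using only the Python standard library. It is a hand-rolled stand-in, not the official Prometheus client bindings: `record_request`, `render_metrics`, `serve`, the `app_http_requests_total` metric name, and the `/metrics` path are all assumptions made for illustration.

```python
import threading
from collections import defaultdict
from http.server import BaseHTTPRequestHandler, HTTPServer

# Stand-in for a client library's labeled counter: request counts by status code.
_lock = threading.Lock()
_requests_by_status = defaultdict(int)


def record_request(status: int) -> None:
    """Increment the counter for this status; call it once per handled request."""
    with _lock:
        _requests_by_status[str(status)] += 1


def render_metrics() -> str:
    """Render the counter in the plain text format that a scraper reads."""
    lines = [
        "# HELP app_http_requests_total Total HTTP requests handled.",
        "# TYPE app_http_requests_total counter",
    ]
    with _lock:
        for status, count in sorted(_requests_by_status.items()):
            lines.append(f'app_http_requests_total{{status="{status}"}} {count}')
    return "\n".join(lines) + "\n"


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == "/metrics":
            # The scrape endpoint itself is not counted as app traffic.
            body, status = render_metrics().encode(), 200
        elif self.path == "/":
            body, status = b"hello\n", 200
            record_request(status)
        else:
            body, status = b"not found\n", 404
            record_request(status)
        self.send_response(status)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the example quiet


def serve(port: int = 8000) -> None:
    """Blocks forever; call it from your own entry point."""
    HTTPServer(("127.0.0.1", port), Handler).serve_forever()
```

Calling `serve()` and then requesting `/` a few times followed by `/metrics` should show the counter growing. A real client library takes care of the registry, thread safety, and text rendering, so application code is typically reduced to declaring the counter and incrementing it.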