AI Strategies for Software Engineering Career Growth


By: Ruthie Irvin


Space.com sums up the Big Bang as our universe starting “with an infinitely hot and dense single point that inflated and stretched—first at unimaginable speeds, and then at a more measurable rate […] to the still-expanding cosmos that we know today,” and that’s kind of how I like to think about November 2022 for junior developers. All the conditions necessary to alter the career paths of brand new software engineers coalesced—extreme layoffs and hiring freezes in tech danced with the irreversible introduction of ChatGPT and GitHub Copilot. Recession and AI-assisted programming signaled the potential end of a dream to bootcamp-educated juniors.

With internships secured for every member of my cohort at a 6-month nonprofit full-stack bootcamp, I looked forward to continuing my education through a paid placement at one of the sponsor companies. Shortly before the interviews and matching process commenced, concerning announcements filtered in that some of the sponsors stopped responding to communications. Getting ghosted by those companies meant that not everyone had a placement after all.

A shift in programming

Without delay, programming shifted to interview prep and studying LLMs as a means of proving that we were just as cutting edge as the next candidate. The months we’d just spent learning how to Create-React-App, writing JOINs with MySQL, and deploying an app on Render with Auth0 integration simply didn’t guarantee a job like it would have six months prior. Asking the Google gods or Stack Overflow archives the right question took a backseat to creatively prompting LLMs to solve our problems faster. AI did in moments what sometimes took us days, and while it can’t replace learning how things work under the hood on the path to effective engineering, progressing as a junior developer requires harnessing its power to some degree.

Despite a hiring freeze, Honeycomb kept their commitment to the following cohort and took on two interns—including me—in the summer of 2023. Since the company provides a license for Copilot and has a clear AI usage policy, I have the privilege of utilizing AI tools to grow as a software engineer—including the luxury of learning how to get out of the traps set by its overconfidence.

Some AI best practices for engineers

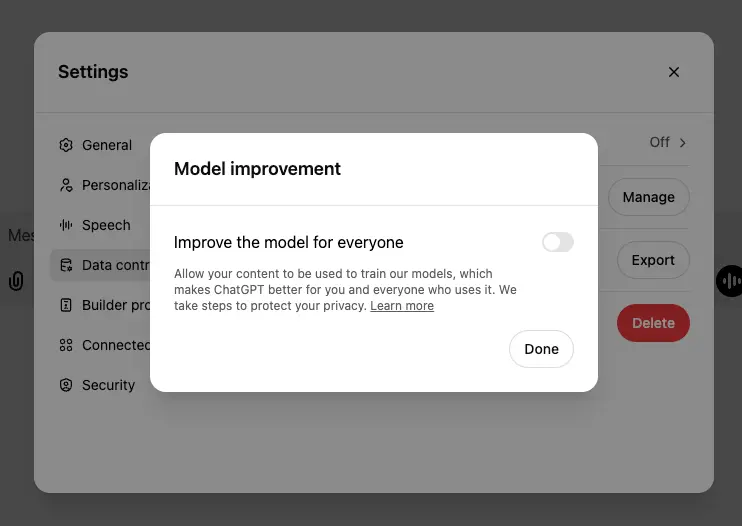

I found that in order to maintain good hygiene in software development, there are other best practices for AI tools besides respecting the company’s policies for use (i.e., toggling settings so the bot won’t learn from our convos at Honeycomb).

Autocomplete

Using Copilot’s autocomplete during pair programming saves so much time, and having that second set of eyes makes evaluating the accuracy of its guess much faster. Those precious minutes saved always expedite working on the more interesting problems at hand.

Treat AI like a pair programming partner

To avoid the trap of becoming a “lazy programmer” when working alone, I treat AI like a pair programming partner: I ask thoughtful, specific questions and do some of the setup work manually so I don’t forget the basics.

Recently, while building a toggle component, I needed to write a simple yet comprehensive suite of tests according to the guidelines of our design system. Copilot would have had no problem spitting them out in seconds, but I haven’t had many opportunities to write tests from scratch since bootcamp, and I didn’t want to pass up a good learning opportunity to save a few minutes.

Since I can add whole files to the Copilot chat interface, I attached the component and CSS module files and then fed in this prompt:

You’re building this toggle component for your company’s design system. You want to make sure that the tests cover functionality as well as accessibility standards, and that the classNames are getting applied correctly. Without writing the code, what is a list of tests you’d write to assure full coverage of this component?

I took that list and asked the real people on my team if they agreed with the suggestions. Once they confirmed, I manually wrote the tests until they passed. I verified the tests actually tested what I wanted by breaking them on purpose—AI doesn’t QA its snippets like that. It can’t confirm that it’s testing exactly what the prompter wants (despite its presumptive certainty) because it’s guessing, so using its responses as a guidance tool rather than a generator tends to make for the best results.
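One way to picture that “break it on purpose” check is the minimal TypeScript sketch below. The names `ToggleState`, `toggle`, `brokenToggle`, and `runToggleChecks` are hypothetical stand-ins, not the actual component or test suite, and plain functions take the place of a real testing library. The same checks run against a correct implementation and a deliberately broken one; if the broken one still passes, the checks aren’t testing what they claim to.

```typescript
// Hypothetical, framework-free model of a toggle (an assumption for illustration).
type ToggleState = { checked: boolean; disabled: boolean };

// Intended behavior: flip `checked` unless the toggle is disabled.
function toggle(state: ToggleState): ToggleState {
  if (state.disabled) return state;
  return { ...state, checked: !state.checked };
}

// Deliberately broken variant: it ignores `disabled`.
function brokenToggle(state: ToggleState): ToggleState {
  return { ...state, checked: !state.checked };
}

// The checks take the implementation as a parameter so the exact same
// assertions can run against both versions. Returns the names of failed checks.
function runToggleChecks(impl: (s: ToggleState) => ToggleState): string[] {
  const failures: string[] = [];

  const on = impl({ checked: false, disabled: false });
  if (on.checked !== true) failures.push("flips unchecked to checked");

  const off = impl({ checked: true, disabled: false });
  if (off.checked !== false) failures.push("flips checked to unchecked");

  const locked = impl({ checked: false, disabled: true });
  if (locked.checked !== false) failures.push("ignores input when disabled");

  return failures;
}

// A trustworthy suite passes on the real code and fails on the broken code.
const passing = runToggleChecks(toggle);
const caught = runToggleChecks(brokenToggle);
```

Here `passing` comes back empty, while `caught` names the disabled-state check, which is the evidence that the check really exercises the behavior it describes.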

To be clear, there are plenty of times that I use Copilot and ChatGPT to generate boilerplate code or do a first pass at something I don’t yet grasp. Like watching a more experienced programmer work in a pairing session, seeing how far I can understand the generated code and then asking follow-up questions bolsters my learning every time. But, whether or not a code block works, the moment I paste it into the editor it’s my job to make sure I understand and trust every line before I put a PR in review.

A recent example

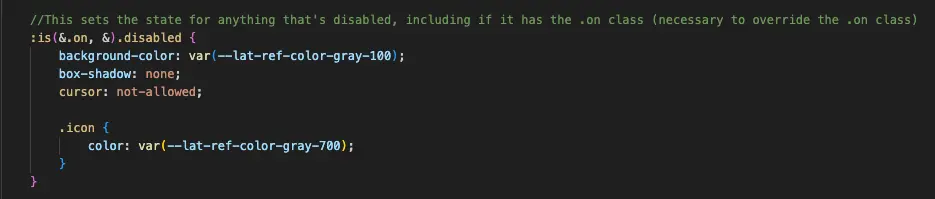

One of my favorite recent examples of how I use the bot day-to-day came when I paired with another engineer on the CSS module of the same toggle project. I had used AI to resolve the specificity issues I was facing on a particularly difficult variant, but the result was CSS nested four layers deep, and I recognized the code smell immediately. We were stumbling through refactoring some lines to eliminate repetition while also honoring specificity, and I fed the block to Copilot for a suggestion while my colleague searched the web for some ideas.

The problem I’d made for myself with AI was then resolved by debugging with AI. Since neither of us was familiar with the :is() pseudo-class, we studied the generated code and learned about it, then ultimately adopted that solution.
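For readers who haven’t met :is() either, here is a rough TypeScript sketch of what it buys you. The selectors (`.toggle`, `.thumb`) are made up for illustration and are not the CSS from that project, and `expandIs` is a hypothetical helper that only handles a single, non-nested :is() group. It spells out the longer selector list that one :is() rule stands in for.

```typescript
// Expand a single `:is(a, b, ...)` group into the equivalent list of
// longhand selectors. Assumption: at most one :is() group, with no nested
// parentheses inside it. This is a teaching aid, not a CSS parser.
function expandIs(selector: string): string[] {
  const match = selector.match(/:is\(([^()]*)\)/);
  if (!match) return [selector];

  const group = match[0]; // the whole ":is(...)" text
  const inner = match[1]; // the comma-separated options inside it

  return inner
    .split(",")
    .map((option) => selector.replace(group, option.trim()));
}

// Hypothetical selector: style the thumb when the toggle is hovered or focused.
const compact = ".toggle:is(:hover, :focus-visible) .thumb";
const expanded = expandIs(compact);
// expanded: [".toggle:hover .thumb", ".toggle:focus-visible .thumb"]
```

One caveat when specificity matters: :is() takes the specificity of its most specific argument, so the compact and expanded forms only weigh the same when every option has equal specificity, as the two pseudo-classes here do.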

Aside from generating code with the help of AI, software engineers can lean on these tools for help communicating about their careers. A good self-assessment during review season or well-crafted resume does more than recount job duties in a bulleted list—it communicates the overall impact that work had on the organization. A simple prompt like, “Use the attachments to write a list of impact statements about these work outcomes that would stand out to a recruiter” submitted along with the requirements from a job listing and a list of recent work/outcomes could offer great shape to the developer’s story. Editing those statements matters because recruiters can absolutely tell when ChatGPT did the work, but save some sweat on the first draft and never underestimate the power of polished self-presentation.

Imposter syndrome

Unlike a bootcamper, AI doesn’t suffer from imposter syndrome in the face of an inhospitable job market. Its responses instead tend toward a hyper-confidence that makes even blatantly wrong hallucinations sound correct, and sometimes debugging the generated code takes longer than writing it the old-fashioned way.

It’s important not to fall into the trap of chasing “developer velocity” at the expense of deep understanding, which builds at a more measurable rate. That said, with intentional practices, using AI tools to learn and save time offers incredible benefits to developers looking to grow their careers.
