Here at Honeycomb, we emphasize that organizations are sociotechnical systems. At a high level, that means that “wet-brained” people and the stuff they do are irreducible to “dry-brained” computations. That cashes out as the inability to ultimately remove or replace people in organizations with computers, in spite of what artificial general intelligence (AGI) ideologues would have you believe. The best that such artifacts can do is “relieve labor-intensive toil,” as my colleagues Charity and Phillip put it.

But I’ve given exactly zero evidence for that position. What justifies the claim that computers can’t replace people, and that organizations only function because of those people?

What follows is an account of a real-world “near-miss” at Honeycomb in which I happened to play a role. I want to describe the situation, why technical artifacts alone couldn’t have helped, and then lay out why it took people to explain what happened. If you want a case where I wasn’t involved, I’ve discussed others elsewhere.

A change in Semantic Conventions

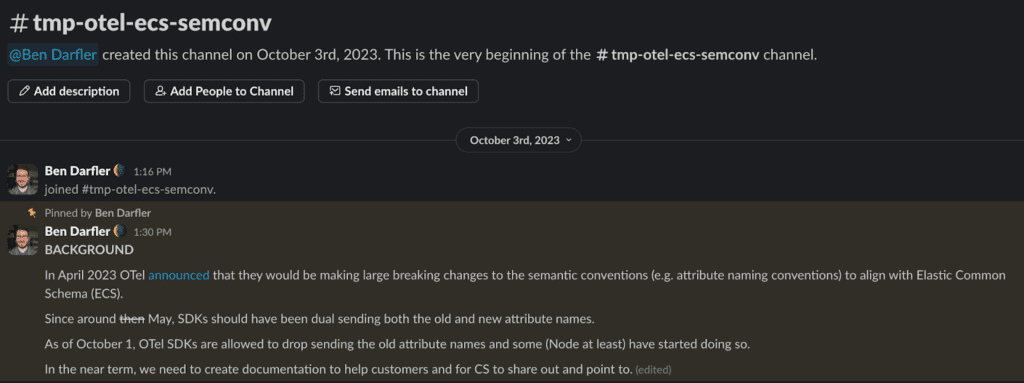

The proximate cause of the near-miss that Honeycomb prevented was a set of changes to OpenTelemetry’s Semantic Conventions back in April 2023 (for you incident-heads out there, I’ll deconstruct “cause” as I proceed).

From May through September, OpenTelemetry libraries needed to emit both the old and new schema. On October 1st, those libraries no longer needed to send both—and thus a breaking change was permitted.

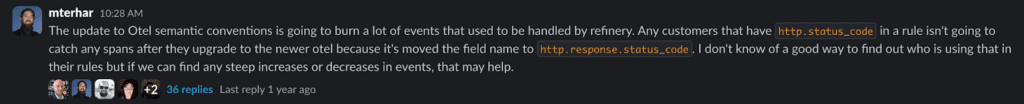

Honeycomb regularly contributes to OpenTelemetry, and several of my colleagues who are involved with the project knew about all this. However, lots of people within Honeycomb didn’t know about this. On October 3rd, Mike Terhar posted in the customer success team Slack channel about a problem he foresaw with this change:

The problem he foresaw concerned our Refinery sampling proxy: the config file used to set the sampling rules requires hardcoding specific attribute names. Customers who use the OpenTelemetry libraries and their Semantic Conventions could upgrade to library versions that include the breaking changes. In that case, they would also need to update their Refinery sampling rules to account for the new attribute names. If they didn’t, Refinery wouldn’t know to look for the new names and wouldn’t sample events carrying the newly-named attributes in the same way! That would mean that the number of events they were sending to Honeycomb could increase a lot, and they could then be charged for all those events.
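To make the failure mode concrete, here is a minimal sketch in Python. It is not Refinery's actual rule format or implementation. The `Rule` class, `sample_rate_for` function, and keep-everything default are hypothetical, and the rename from `http.status_code` to `http.response.status_code` is used only as an illustrative example of a Semantic Convention attribute rename.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical sampling rule keyed on a hardcoded attribute name."""
    field: str        # attribute name the rule looks for
    value: object     # value the attribute must equal
    sample_rate: int  # keep 1 in N matching events


# Assumption for this sketch: events that match no rule are all kept.
DEFAULT_SAMPLE_RATE = 1


def sample_rate_for(event: dict, rules: list) -> int:
    """Return the rate of the first matching rule, else the default."""
    for rule in rules:
        if event.get(rule.field) == rule.value:
            return rule.sample_rate
    return DEFAULT_SAMPLE_RATE


# Rules written against the old attribute name only.
old_rules = [Rule("http.status_code", 200, 100)]

# Rules updated to recognize both the old and the new name.
updated_rules = old_rules + [Rule("http.response.status_code", 200, 100)]

old_event = {"http.status_code": 200}           # emitted before the upgrade
new_event = {"http.response.status_code": 200}  # emitted after the upgrade

print(sample_rate_for(old_event, old_rules))      # 100: sampled as intended
print(sample_rate_for(new_event, old_rules))      # 1: falls through, every event kept
print(sample_rate_for(new_event, updated_rules))  # 100: sampled again
```

Nothing in the middle case raises an error. The rule simply stops matching, so event volume rises quietly until someone notices.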

We didn’t want that to happen because that would be a poor customer experience.

Worker bees getting to work

We sprang into action. Members of the customer success team immediately engaged our colleagues in engineering to ask whether any of the libraries had in fact upgraded to stop sending the old names. They started a temporary channel to corral discussion.

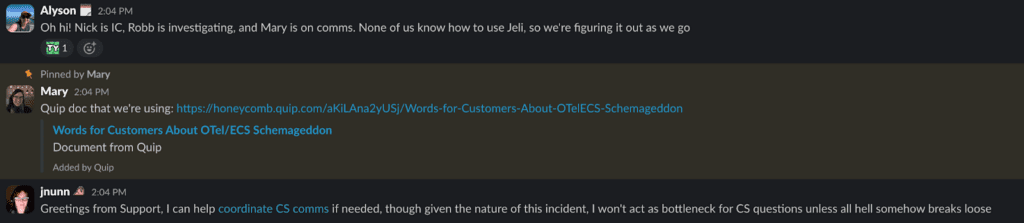

It turned out that engineering didn’t know, so we figured we needed to investigate. There was also some debate about whether this was an “incident.” From an engineering perspective, the answer might seem no—but we in customer success were quite alarmed by the situation and thought it did merit that distinction. I cut the Gordian knot and declared an incident using the Jeli bot in Slack.

The challenge we faced was that we didn’t know if there was even a problem yet, or if we were just anticipating it.

- Had any of the libraries actually upgraded to the breaking change? They were permitted to do so on October 1st, but that didn’t mean that they actually had.

- Did our customers know about the Semantic Convention changes, or would they be blindsided? If customers were affected, which ones were they, and how were they impacted?

Members of customer success, engineering, and product (specifically docs) joined forces to look at the latest versions of the OpenTelemetry libraries and draft communications to customers informing them of the changes.

My fellow technical customer success managers and I also sent preliminary warnings to customers to check their library versions, just in case they had unwittingly accepted the breaking change.

After a quick look at the latest versions of each language library in GitHub, we determined that none of the languages had yet issued upgrades with the breaking change. What a relief!

Moving beyond “cause” and blame

However, we also saw that the OpenTelemetry project hadn’t made any sort of announcement on or close to October 1st to inform users that this breaking change was now permitted, nor were the maintainers of the various libraries required to do more than make a comment in their release notes. This is where one can question the idea that OpenTelemetry’s change was the “cause.” A gloss of the situation may make it seem that OTel is at fault here; however, digging deeper reveals other contributing factors.

There’s little research about the rate at which engineers read release notes for the code libraries that they use, but it’s not a stretch to imagine that not everyone reads them. Engineers are also known to use tools like Dependabot to automatically generate PRs when their dependencies announce a new version, because it’s hard to keep track of all of them manually. Whether release notes get read or each of those PRs gets a thorough review is subject to the production pressure that engineers face in their workplaces. Given these other factors, saying that the Semantic Convention change is all OpenTelemetry’s fault is incorrect and misleading.

A more correct and pragmatic approach is to use opportunities like this for their revelatory power. Studying what normal working conditions are like and how work processes are actually accomplished grants the opportunity for those doing the work to update their prior understandings, to make collective decisions about how to proceed, and to take all that collective processing back to their individual work. This is what we do at Honeycomb.

Solving this issue required humans

Our solution to this predicament was multifaceted:

- We issued those quick warnings to customers.

- We also drafted a set of announcements intended for a broader audience.

- Longer term, Honeycomb employees who have formal roles in the OpenTelemetry project worked through official channels to request that the project publicize the changes.

- Finally, we continued to practice incident reviews so that teams across Honeycomb learn from incidents and prepare to be unprepared.

Why do we need to do that last step? Well, it’s because technical artifacts like computers (including AI) can’t. Computers can only do what they’ve been programmed to do, and can only do it with the data available. Even AI has limitations based on things like data formats. The requirement of explicit and enumerated parameters is a hard boundary.

People are different. No artifact can disengage from the present and reflect on its situation, asking if it should seek out other data or reinterpret the data it has available. People can. It’s this capacity, according to philosopher Gilbert Simondon, that allows people to serve as transducers of information between technical artifacts. People are necessary for complex technical systems to work together when all of the components weren’t designed to do so. We handle the edge cases. More than that, we can and do invent whole new solutions which make the old artifacts and their edge cases obsolete.

It’s this adaptive capacity that makes organizations work and do the useful things they do. Humans and machines have to work together in a sociotechnical system.