Having more than one static sample rate

If the sample rate is high, whether it was set statically or adjusted dynamically, we need to consider that we’ll miss long-tail events such as errors or high-latency requests, because the chance that a 99.9th percentile outlier is also picked by random sampling is slim. Likewise, we may want to have at least some data for each of our distinct customers rather than have the high-volume customers drown out the low-volume customers.

So, we’d like to sample events by a property of the event itself, such as the return status, latency, endpoint, or a high cardinality field like customer ID. For properties present in the request itself such as endpoint or customer ID, we can perform “head sampling” and make the decision to sample or not at the start of execution and propagate that decision further downstream (e.g. with a “require sampling” header bit) so we get full traces.
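To make head sampling concrete, here is a minimal sketch. It assumes a hypothetical per-endpoint rate table (`endpointRates`) and a hypothetical `X-Require-Sampling` header; neither name comes from a real library or from the code in this article. The decision uses only what is known when the request arrives, and the outgoing request carries that decision downstream.

```go
package main

import (
	"fmt"
	"math/rand"
	"net/http"
)

// sampledHeader is a hypothetical header name used to propagate the decision.
const sampledHeader = "X-Require-Sampling"

// endpointRates is an illustrative table: keep 1 in N requests per endpoint.
var endpointRates = map[string]int{
	"/healthz":  10000,
	"/checkout": 10,
}

// defaultRate applies to endpoints that are not in the table.
const defaultRate = 1000

// rateFor returns the sample rate (keep 1 in N) for an endpoint.
func rateFor(endpoint string) int {
	if rate, ok := endpointRates[endpoint]; ok {
		return rate
	}
	return defaultRate
}

// headSample decides whether to keep a request, given a random value r in [0, 1).
// An upstream decision to sample always wins so that the trace stays complete.
func headSample(endpoint string, upstreamSampled bool, r float64) bool {
	if upstreamSampled {
		return true
	}
	return r < 1.0/float64(rateFor(endpoint))
}

// propagate marks an outgoing request so downstream services also sample it.
func propagate(out *http.Request, keep bool) {
	if keep {
		out.Header.Set(sampledHeader, "1")
	}
}

func main() {
	in, _ := http.NewRequest("GET", "http://example.invalid/checkout", nil)
	upstream := in.Header.Get(sampledHeader) == "1"
	keep := headSample(in.URL.Path, upstream, rand.Float64())

	out, _ := http.NewRequest("GET", "http://downstream.invalid/charge", nil)
	propagate(out, keep)
	fmt.Println("sampled:", keep, "header:", out.Header.Get(sampledHeader))
}
```

Keying the table on customer ID instead of endpoint would work the same way; only the lookup key changes.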

But for return status and latency, we know only in retrospect whether they’re interesting outliers; this is “tail sampling”. Downstream services have already independently chosen whether to discard or instrument, so at best we’ll have the outlying downstream spans but none of the surrounding context. To collect full traces and perform tail sampling, some collector-side logic is required to buffer entire traces and retrospectively decide what to keep. This buffered sampling technique is not feasible entirely from within the instrumented code.
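The collector-side buffering described above might look roughly like the following sketch. The `Span` and `TraceBuffer` types are hypothetical simplifications, not the API of any real collector, and the buffer is not safe for concurrent use. Spans are held per trace ID, and the keep-or-drop decision is made only once the whole trace is available.

```go
package main

import (
	"fmt"
	"time"
)

// Span is a hypothetical, simplified span record.
type Span struct {
	TraceID  string
	Name     string
	Duration time.Duration
	Err      bool
}

// TraceBuffer holds spans per trace until the trace is finished.
type TraceBuffer struct {
	spans map[string][]Span
}

// NewTraceBuffer returns an empty buffer.
func NewTraceBuffer() *TraceBuffer {
	return &TraceBuffer{spans: make(map[string][]Span)}
}

// Add buffers a span; nothing is kept or dropped yet.
func (b *TraceBuffer) Add(s Span) {
	b.spans[s.TraceID] = append(b.spans[s.TraceID], s)
}

// isOutlier reports whether any span in the trace errored or was slow.
func isOutlier(spans []Span, slow time.Duration) bool {
	for _, s := range spans {
		if s.Err || s.Duration > slow {
			return true
		}
	}
	return false
}

// Finish makes the retrospective decision for one trace and releases its buffer.
// r is a random value in [0, 1); baselineRate means "keep 1 in N" ordinary traces.
// It returns the spans to keep (nil if dropped) and the sample rate that applied.
func (b *TraceBuffer) Finish(traceID string, r float64, baselineRate int) ([]Span, int) {
	spans := b.spans[traceID]
	delete(b.spans, traceID)
	if isOutlier(spans, 500*time.Millisecond) {
		return spans, 1 // outliers are always kept in this sketch
	}
	if r < 1.0/float64(baselineRate) {
		return spans, baselineRate
	}
	return nil, baselineRate
}

func main() {
	buf := NewTraceBuffer()
	buf.Add(Span{TraceID: "a", Name: "frontend", Duration: 40 * time.Millisecond})
	buf.Add(Span{TraceID: "a", Name: "db", Duration: 900 * time.Millisecond})
	kept, rate := buf.Finish("a", 0.9, 1000)
	fmt.Println(len(kept), rate) // prints "2 1"
}
```

Because the slow `db` span marks the trace as an outlier, the fast `frontend` span is kept along with it, which is the context that per-service decisions would have lost.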

Let’s start varying the sample rates by key. We can sample the baseline non-outlier events at 1 in 1000 and choose to tail sample the errors and slow queries at 1:1 or 1:5. This is still vulnerable to spikes in instrumentation cost if the rate of errors spikes. Modifying the original flat sampling code, we get:

    import (
        "flag"
        "math/rand"
        "net/http"
        "time"
    )

    // Both rates mean "keep 1 in N".
    var sampleRate = flag.Int("sampleRate", 1000, "Service's sample rate")
    var outlierSampleRate = flag.Int("outlierSampleRate", 5, "Outlier sample rate")

    // callAnotherService and RecordEvent are assumed to be defined elsewhere.
    func handler(resp http.ResponseWriter, req *http.Request) {
        start := time.Now()
        i, err := callAnotherService(req)
        resp.Write(i)

        r := rand.Float64()
        if err != nil || time.Since(start) > 500*time.Millisecond {
            // Errors and slow requests are outliers: sample them more heavily.
            if r < 1.0/float64(*outlierSampleRate) {
                RecordEvent(req, *outlierSampleRate, start, err)
            }
        } else {
            // Everything else is sampled at the baseline rate.
            if r < 1.0/float64(*sampleRate) {
                RecordEvent(req, *sampleRate, start, err)
            }
        }
    }

So we can support having multiple different sample rates. But how does this work with Target Rate Sampling?
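Since each recorded event carries the rate it was sampled at, events sampled at different rates can still be combined into one estimate. The sketch below assumes a hypothetical `Event` record whose `SampleRate` of N means "this event stands for N original requests"; it illustrates the weighting idea and is not code from the service above.

```go
package main

import "fmt"

// Event is a hypothetical recorded event. A SampleRate of N means the event
// represents N original requests.
type Event struct {
	Outlier    bool
	SampleRate int
}

// estimateCounts sums sample rates to estimate how many original requests
// of each kind the recorded events represent.
func estimateCounts(events []Event) (ordinary, outliers int) {
	for _, e := range events {
		if e.Outlier {
			outliers += e.SampleRate
		} else {
			ordinary += e.SampleRate
		}
	}
	return ordinary, outliers
}

func main() {
	events := []Event{
		{Outlier: false, SampleRate: 1000},
		{Outlier: false, SampleRate: 1000},
		{Outlier: true, SampleRate: 5},
		{Outlier: true, SampleRate: 5},
		{Outlier: true, SampleRate: 5},
	}
	ordinary, outliers := estimateCounts(events)
	fmt.Println(ordinary, outliers) // prints "2000 15"
}
```

Five stored events stand in for roughly 2,015 requests, and the outliers are not under-represented even though they were sampled at a different rate.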

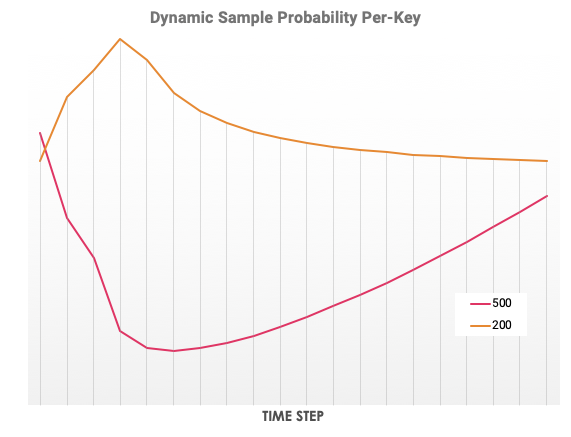

Sampling by key and target rate

Putting the two techniques together, let’s extend what we’ve already done to target specific rates of instrumentation. If a request is anomalous (it has latency above 500ms or is an error), let’s choose it for tail sampling at its own guaranteed rate, while rate-limiting the other requests to fit within a budget of instrumented requests per second, as before:

    import (
        "flag"
        "math/rand"
        "net/http"
        "time"
    )

    var targetEventsPerSec = flag.Int("targetEventsPerSec", 4, "The target number of ordinary requests per second to sample from this service.")
    var outlierEventsPerSec = flag.Int("outlierEventsPerSec", 1, "The target number of outlier requests per second to sample from this service.")

    // Current sample rates (keep 1 in N), recomputed once a minute.
    // NOTE: the counters and rates are shared between goroutines without
    // synchronization to keep the example short; real code should guard
    // them with sync/atomic or a mutex.
    var sampleRate float64 = 1.0
    var requestsInPastMinute *int
    var outlierSampleRate float64 = 1.0
    var outliersInPastMinute *int

    func main() {
        // Initialize counters.
        rc := 0
        requestsInPastMinute = &rc
        oc := 0
        outliersInPastMinute = &oc

        go func() {
            for {
                time.Sleep(time.Minute)

                // Pick the rate that would have kept ordinary traffic within budget.
                newSampleRate := float64(*requestsInPastMinute) / float64(60 * *targetEventsPerSec)
                if newSampleRate < 1 {
                    sampleRate = 1.0
                } else {
                    sampleRate = newSampleRate
                }
                newRequestCounter := 0
                requestsInPastMinute = &newRequestCounter

                // Same calculation for outliers, against their own budget.
                newOutlierRate := float64(*outliersInPastMinute) / float64(60 * *outlierEventsPerSec)
                if newOutlierRate < 1 {
                    outlierSampleRate = 1.0
                } else {
                    outlierSampleRate = newOutlierRate
                }
                newOutlierCounter := 0
                outliersInPastMinute = &newOutlierCounter
            }
        }()

        http.HandleFunc("/", handler)
        // [...]
    }

    // floatFromHexBytes, callAnotherService, and RecordEvent are assumed to be
    // defined elsewhere.
    func handler(resp http.ResponseWriter, req *http.Request) {
        // Derive r from the Sampling-ID header when it parses; otherwise
        // fall back to a random value.
        r, err := floatFromHexBytes(req.Header.Get("Sampling-ID"))
        if err != nil {
            r = rand.Float64()
        }

        start := time.Now()
        i, err := callAnotherService(req)
        resp.Write(i)

        if err != nil || time.Since(start) > 500*time.Millisecond {
            *outliersInPastMinute++
            if r < 1.0/outlierSampleRate {
                RecordEvent(req, outlierSampleRate, start, err)
            }
        } else {
            *requestsInPastMinute++
            if r < 1.0/sampleRate {
                RecordEvent(req, sampleRate, start, err)
            }
        }
    }

Whew. That has a number of awkward copy-pasted pieces, so we probably shouldn’t paste again to support a third category. Instead, we need to support arbitrarily many keys.
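One small step toward removing that duplication, offered here as an assumption rather than as the article's eventual design, is to pull the shared rate arithmetic into a helper. `rateForWindow` is a hypothetical name; it computes the same "requests seen divided by budget, floored at 1" value that the goroutine above computes twice.

```go
package main

import "fmt"

// rateForWindow returns the sample rate (keep 1 in N) that would have held the
// number of recorded events near targetPerSec over the last window, given how
// many requests were actually seen. It never returns less than 1.0.
func rateForWindow(seen, windowSeconds, targetPerSec int) float64 {
	budget := windowSeconds * targetPerSec
	if budget <= 0 {
		// Treating a non-positive budget as "no limit" is a choice made
		// for this sketch, not something the original code specifies.
		return 1.0
	}
	rate := float64(seen) / float64(budget)
	if rate < 1.0 {
		return 1.0
	}
	return rate
}

func main() {
	// 24,000 ordinary requests in a minute with a budget of 4 per second.
	fmt.Println(rateForWindow(24000, 60, 4)) // prints "100"
	// 30 outliers in a minute with a budget of 1 per second: keep everything.
	fmt.Println(rateForWindow(30, 60, 1)) // prints "1"
}
```

With a helper like this, each category needs only its own counter and its own target, which is the shape a per-key version would generalize.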