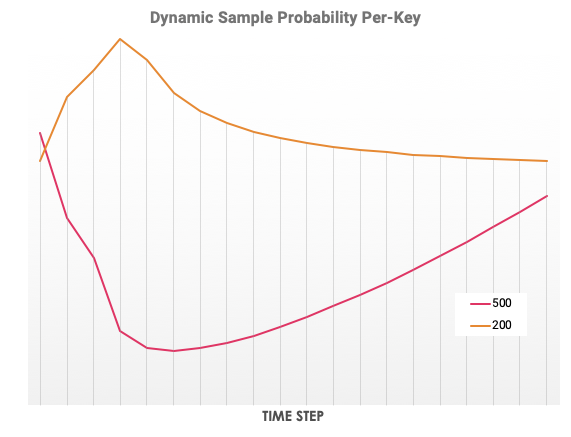

Sampling with dynamic rates on arbitrarily many keys

What if we can’t predict a finite set of request quotas we want to set, e.g. if we want to cover the customer ID case above? Setting the target rates by hand for each key (“error/latency” vs. “normal”) was already ugly and produced a lot of duplicate code. Instead, we can refactor to use a map holding each key’s target rate and number of seen events, and look keys up in it to make sampling decisions. This is how we get to what’s implemented in the dynsampler-go library, which maintains a map over any number of sampling keys and allocates a fair share to each key as long as it’s novel. It looks something like this:

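```go
package main

import (
	"math/rand"
	"net/http"
	"strconv"
	"sync"
	"time"
)

// counts tracks how many events we've seen per key this interval, sampleRates
// holds the rate computed for each key from the previous interval, and
// targetRates holds the desired number of kept events per second for each key.
var (
	counts      = map[SampleKey]int{}
	sampleRates = map[SampleKey]float64{}
	targetRates = map[SampleKey]int{}

	// mu guards counts and sampleRates: handlers run concurrently, and the
	// rollover goroutine must also hold mu while it swaps in new maps.
	mu sync.Mutex
)

func neverSample(k SampleKey) bool {
	// Left to your imagination: for example, a request we know is a keepalive
	// that we never want to record.
	return false
}

// Boilerplate main() and the goroutine that overwrites the maps and rolls them
// over every interval go here, along with floatFromHexBytes, callAnotherService,
// and RecordEvent.

type SampleKey struct {
	ErrMsg        string
	BackendShard  int
	LatencyBucket int
}

// The rollover might compute, for each k:
//   newRate[k] = counts[k] / (interval * targetRates[k])
// The dynsampler library has more advanced techniques for computing sampleRates
// based on targetRates, or even without explicit targetRates.

func checkSampleRate(resp http.ResponseWriter, start time.Time, err error, sr map[SampleKey]float64, c map[SampleKey]int) float64 {
	msg := ""
	if err != nil {
		msg = err.Error()
	}
	// Round latency down to a 100ms bucket, expressed in milliseconds.
	roundedLatency := 100 * int(time.Since(start)/(100*time.Millisecond))
	// The header is a string; a missing or non-numeric value falls back to shard 0.
	shard, _ := strconv.Atoi(resp.Header().Get("Backend-Shard"))
	k := SampleKey{
		ErrMsg:        msg,
		BackendShard:  shard,
		LatencyBucket: roundedLatency,
	}
	if neverSample(k) {
		return -1.0
	}
	c[k]++
	if r, ok := sr[k]; ok {
		return r
	}
	// No rate computed yet: the key is novel, so keep every event.
	return 1.0
}

func handler(resp http.ResponseWriter, req *http.Request) {
	// Use the caller's Sampling-ID if present so decisions agree across services;
	// otherwise pick a random value. Declaring r with := inside an if statement
	// would shadow it and leave this r stuck at 0.
	r, err := floatFromHexBytes(req.Header.Get("Sampling-ID"))
	if err != nil {
		r = rand.Float64()
	}
	start := time.Now()
	i, err := callAnotherService(r)
	resp.Write(i)

	mu.Lock()
	sampleRate := checkSampleRate(resp, start, err, sampleRates, counts)
	mu.Unlock()

	// A sample rate of N means we keep roughly 1 in N events.
	if sampleRate > 0 && r < 1.0/sampleRate {
		RecordEvent(req, sampleRate, start, err)
	}
}
```

We’re close to having everything put together. But let’s make one last improvement by combining the tail-based sampling we’ve done so far with head-based sampling that can request tracing of everything downstream.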