Monitoring Windows Servers With the OpenTelemetry Collector

The OpenTelemetry Collector is an exceptional solution for proxying and enhancing telemetry, but it’s also great for generating telemetry from machines. In this post, we’ll go through a basic, opinionated setup of using the OpenTelemetry Collector to extract metrics and logs from a Windows server.

By: Martin Thwaites

This post was written by Martin Thwaites and Vivian Lobo.

What is the OpenTelemetry Collector?

I’ve waxed poetic about the Collector many times so I won’t give too long of an overview. At a very high level, the Collector allows you to generate, receive, transform, enrich, and export telemetry data—and does so in a vendor-agnostic way (no proprietary agents!) so that if you change observability backends, you don’t need to instrument everything again.

You can read more about the Collector here: Ask Miss O11y: Is the OpenTelemetry Collector Useful?

Installing the Collector

The Collector is distributed as a binary, but for Windows, there’s also an installer called an MSI. The MSI will install the Collector binaries in the right places, set up permissions, and also install it as a Windows service. You can download the latest version of the MSI from the GitHub page.

Run the MSI like any other installer, follow the prompts, and you’ll have a default installation of the Collector installed into C:\Program Files\OpenTelemetry Collector. At this point, the Collector won’t really do much at all—we need to configure it to gather metrics and event logs.

Adding metrics and logs

Now that you have a Collector running, you need to update its config to tell the service to pull in metrics from the Windows host, and also Event Viewer logs.

The config file (assuming you’ve kept the defaults) will be at C:\Program Files\OpenTelemetry Collector\otel-config.yaml. You’ll need to open this in a text editor with administrator privileges.

We’re going to add three new receivers. The first is the windowseventlog receiver for our logs, the second is the hostmetrics receiver for our metrics, and the third is the windowsperfcounters receiver for some additional metrics.

We’ll talk about the reasons for the two metrics receivers below.

Windows Event Logging

This receiver allows you to generate OpenTelemetry logs from Windows Event Logging. To do this, you need to choose a channel from your Windows Event Log. Most commonly, you use Application and System. You’ll need to provide a separate configuration for each channel you want to ingest.

    receivers:
      # other receivers
      windowseventlog/application:
        channel: application
      windowseventlog/system:
        channel: system

We’re giving these receivers names by appending /application and /system to their type, which makes them easier to spot when we add them to the pipelines. I advise doing this for every component you add to your Collector rather than relying on the type name alone.
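As a rough sketch of how this pattern extends, the fragment below adds a third named receiver for the Security channel. The Security channel and the start_at setting are not part of the setup in this post; they are assumptions for illustration, and reading the Security log may need additional privileges for the account running the Collector service.

```yaml
receivers:
  # Hypothetical third channel, named the same way as the two above.
  windowseventlog/security:
    channel: security
    # Assumption: only read events written after the Collector starts.
    start_at: end
```

A receiver added this way does nothing until it is also listed under the logs pipeline.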

Host metrics

This is the receiver that can take our CPU, memory, disk, and network statistics and generate OTLP metrics signal data from them. We only need a single hostmetrics receiver as we can tell it which metrics data we want to scrape from the Windows system APIs.

    hostmetrics/all:
      collection_interval: 30s
      scrapers:
        memory:
        network:
        filesystem:

You’ll notice here that I’m excluding cpu from the scrapers section. This is because there is currently a bug with the host metrics receiver on Windows that results in negative CPU values in some circumstances, so we’ll use the Windows Performance Counters receiver for that.
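The scrapers section is where this receiver grows. As a hedged sketch, the variant below adds the disk and paging scrapers, which are not in the config used in this post, while still leaving cpu out for the reason given above.

```yaml
hostmetrics/all:
  collection_interval: 30s
  scrapers:
    memory:
    network:
    filesystem:
    # Assumed additions, not part of the original setup:
    disk:      # disk I/O metrics
    paging:    # page file usage metrics
    # The cpu scraper is deliberately left out; see the note above.
```

Each extra scraper adds more metrics per poll, so it is worth enabling only the ones you expect to query.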

Windows performance counters

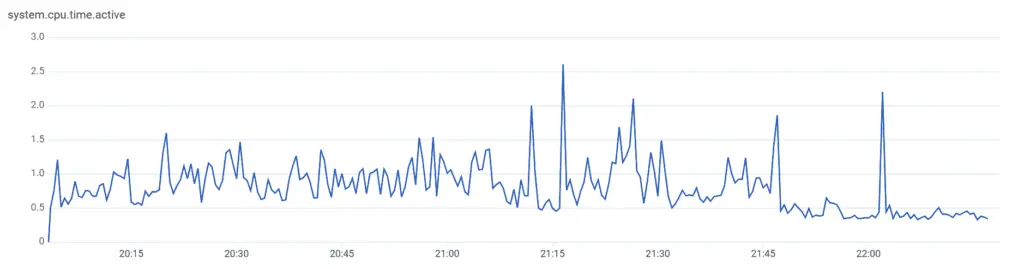

Since there is an issue with using the hostmetrics receiver for CPU stats, we have another mechanism for getting information about the CPU, and potentially other information too.

    windowsperfcounters/processor:
      collection_interval: 30s
      metrics:
        system.cpu.time:
          description: percentage of cpu time
          unit: "%"
          gauge:
      perfcounters:
        - object: "Processor"
          instances: "_Total"
          counters:
            - name: "% Processor Time"
              metric: system.cpu.time
              attributes:
                state: active
            - name: "% Idle Time"
              metric: system.cpu.time
              attributes:
                state: idle

You’ll notice that we’re pulling two different items and adding them to a single metric with attributes. This allows multiple dimensions over a single metric. In Honeycomb, we’ll group these in a special way to ensure that it doesn’t cost a lot to store them.

The important thing in this snippet is that instances is set to _Total. You can set this to "*" to get information about each individual CPU, but in my opinion, that isn’t worthwhile from an observability standpoint.
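If you do want per-CPU data, a minimal sketch of that variant might look like the following. The receiver name windowsperfcounters/processor_per_core is made up for this example, and only the active counter is shown to keep it short.

```yaml
windowsperfcounters/processor_per_core:
  collection_interval: 30s
  metrics:
    system.cpu.time:
      description: percentage of cpu time
      unit: "%"
      gauge:
  perfcounters:
    - object: "Processor"
      # "*" asks for the individual instances (each logical CPU)
      # instead of the single _Total aggregate.
      instances: "*"
      counters:
        - name: "% Processor Time"
          metric: system.cpu.time
          attributes:
            state: active
```

Expect roughly one series per logical processor, so the stored data grows with the core count.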

There are tons of performance counters in Windows, and you can use this receiver to query them all. You can list the counters for an object by running typeperf -q {object}. Some examples of {object} here are Processor, Memory, PhysicalDisk, and LogicalDisk. Alternatively, if you want a big list of all of them, you can use this PowerShell command:

    Get-Counter -ListSet * | Sort-Object -Property CounterSetName | Format-Table CounterSetName, Paths -AutoSize

Collection intervals

With each of the components, you can control the collection_interval. This is how often the Collector will poll that resource to get the information. The number of times you poll directly correlates with how much it costs you to store the data on pretty much all platforms, including Honeycomb. Tuning this is your main lever for controlling metrics costs.

My recommendation here is not to get too granular with this. It may seem like a one-second poll would be valuable, but ultimately, if you’re hitting a problem on a server, you’ll catch CPU or memory spikes over a one-minute period. The examples above use 30 seconds, which I believe is a nice compromise, but you can change these values as you see fit.
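To make the trade-off concrete, here is a sketch that gives each receiver its own interval, with the resulting number of polls per day worked out in the comments. The 60s value for host metrics is an illustrative choice, not a recommendation from this post.

```yaml
receivers:
  hostmetrics/all:
    # 86,400 seconds per day / 60 = 1,440 polls per day
    collection_interval: 60s
    scrapers:
      memory:
      network:
      filesystem:
  windowsperfcounters/processor:
    # 86,400 / 30 = 2,880 polls per day; a 1s interval would be 86,400
    collection_interval: 30s
    # metrics and perfcounters stay as defined earlier
```

Halving the interval doubles the number of data points each metric produces, which is why this setting has such a direct effect on cost.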

Exporter configuration

You’ll then need to configure your exporters. In Windows, we don’t have an (easy) built-in way to configure environment variables for each service. Therefore, I recommend hardcoding these values into the config, but restricting the file’s permissions so that only the user running your Collector service can access it. There are lots of articles about securing credentials for Windows services, so I’m not going to cover that part.

We’ll use two exporters here: one for logs and one for metrics. This is so that we can add them to different datasets in Honeycomb, and therefore be able to query them better.

    exporters:
      otlp/metrics:
        endpoint: api.honeycomb.io:443
        headers:
          x-honeycomb-team: {my-ingest-key}
          x-honeycomb-dataset: "server-metrics"
      otlp/logs:
        endpoint: api.honeycomb.io:443
        headers:
          x-honeycomb-team: {my-ingest-key}
          x-honeycomb-dataset: "server-logs"

Resource Detection processor

Finally, we need information about the server we’re running on to make it into the telemetry data so we can query by it. To do this, we use something called the Resource Detection processor. This has a few detectors to choose from, depending on where your Windows server is running. If you’re running in EC2, an Azure VM, or a Kubernetes cluster, there are specific detectors that can bring in the tags or labels from those services. That’s out of scope here, but take a look at the linked documentation for the processor to work out how to use them.
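For comparison, here is a hedged sketch of the cloud variants. The detectors list takes more than one entry, so a cloud detector can sit alongside system. The processor names below are made up for illustration, and the processor documentation remains the reference for each detector's options and the permissions it needs.

```yaml
processors:
  # Hypothetical variant for a server running on an EC2 instance.
  resourcedetection/ec2:
    detectors: ["ec2", "system"]
  # Hypothetical variant for a server running on an Azure VM.
  resourcedetection/azure:
    detectors: ["azure", "system"]
```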

For this post, we’ll assume this is a bare metal instance and use the system detector.

    processors:
      resourcedetection/system:
        detectors: ["system"]
        system:
          hostname_sources: ["os", "dns", "cname", "lookup"]

Pipelines

The final thing to do is set up the pipelines to link all of this together.

    service:
      pipelines:
        metrics:
          receivers:
            - hostmetrics/all
            - windowsperfcounters/processor
          processors:
            - resourcedetection/system
            - batch
          exporters:
            - otlp/metrics
        logs:
          receivers:
            - windowseventlog/application
            - windowseventlog/system
          processors:
            - resourcedetection/system
            - batch
          exporters:
            - otlp/logs

Troubleshooting

Each time you change the config, you’ll need to restart the Windows service. If the restart fails, the Collector’s logs are in the Windows Event Viewer under the Application log. Beyond that, troubleshooting works the same way on Windows as on any other platform, so you should be able to follow all the troubleshooting steps in the OpenTelemetry documentation.
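One way to check what the Collector is actually producing is to add a second exporter that writes telemetry to the Collector's own log output. The sketch below assumes the debug exporter is included in your Collector build (older releases shipped it under the name logging). Treat it as a temporary aid and remove it once data is flowing.

```yaml
exporters:
  # Assumption: the debug exporter is available in your Collector version.
  debug:
    verbosity: detailed

service:
  pipelines:
    metrics:
      receivers:
        - hostmetrics/all
        - windowsperfcounters/processor
      processors:
        - resourcedetection/system
        - batch
      exporters:
        - otlp/metrics
        - debug   # temporary: writes each metric to the Collector's log output
```

The same extra line under the logs pipeline's exporters would do the equivalent for Event Log data.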

Conclusion

Setting up the Collector on Windows is simple, but not without nuance. The data you get is interesting and valuable when you’re using this remotely to monitor servers or client machines you don’t have physical access to.

Read the series on OpenTelemetry best practices.
