Observability, Telemetry, and Monitoring: Learn About the Differences

Over the past five years, software and systems have become increasingly complex and challenging for teams to understand. A challenging macroeconomic environment, the rise of generative AI, and further advancements in cloud computing compound the problems faced by many organizations. Simply understanding what’s broken is difficult enough, but trying to do so while balancing the need to constantly innovate and ship makes the problem worse. Your end users have options, and if your software systems are unreliable, they’ll choose a different one.

By: Rox Williams

Trying to understand the difference between observability, telemetry, and monitoring can be a big ask. However, learning this distinction will help you evaluate your options and cut through the confusion in the observability landscape.

Observability vs. telemetry vs. monitoring

Are these all the same? No, they are not. It’s easy to get confused, though, since they’re all linked to each other. Observability is a continuous process of analysis, telemetry is the data that feeds into that analysis, and monitoring is a specific task in the observability process. Let’s go through some quick definitions before we dive into the details.

Observability

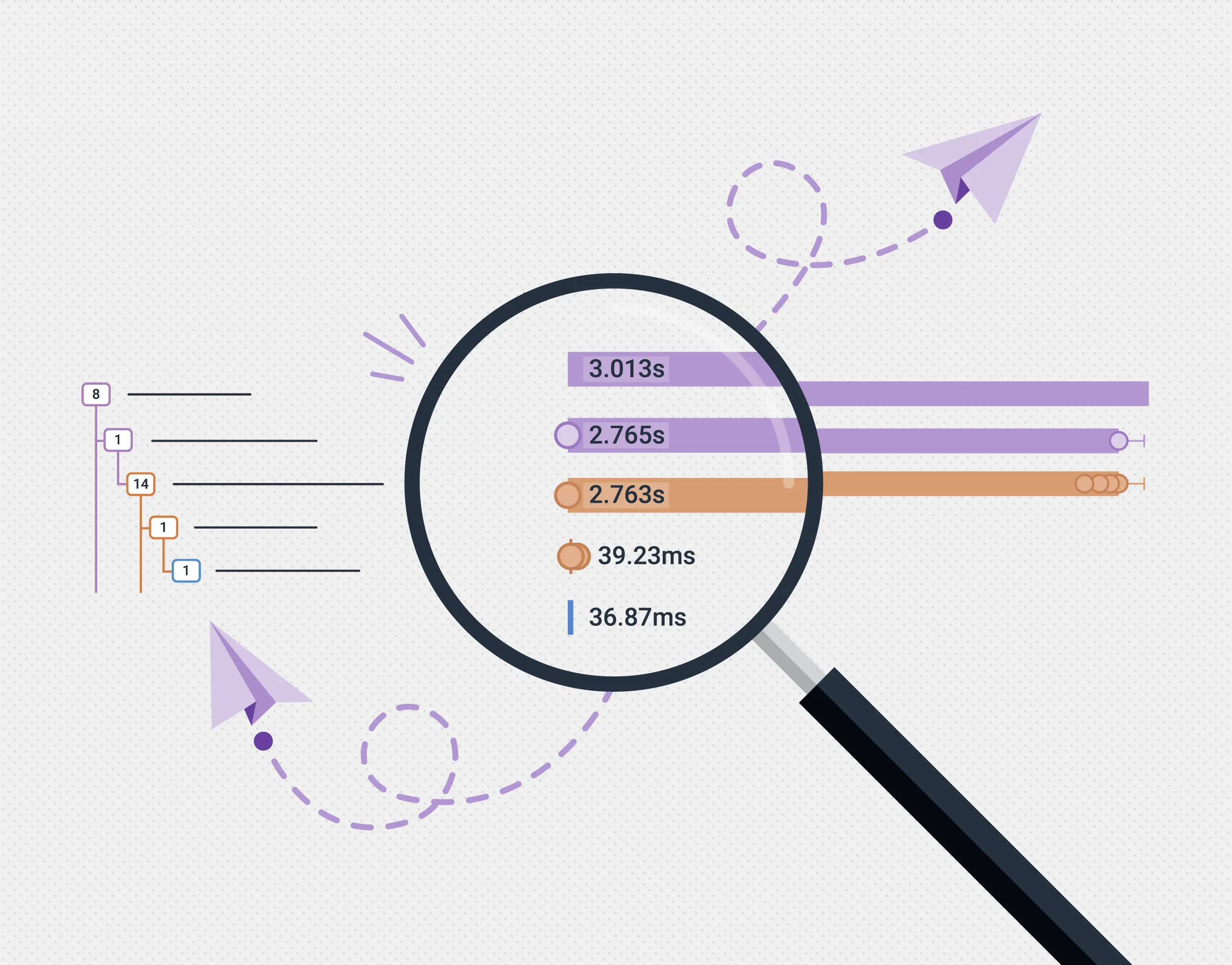

Observability is a continuous process of analyzing and understanding software systems. It relies on telemetry data (such as logs, metrics, traces, profiles, and events) and analysis tools, such as Honeycomb. Observability workflows are how you use these tools and data to solve problems in production, understand the customer experience, and discover the contributing factors to an incident.

Telemetry

Telemetry is a type of data generated by systems. You’re probably familiar with the most common types already—application logs and system metrics. Telemetry is not limited to these signals, though! Systems can generate a variety of structured or unstructured events, like traces that show the relationship between services in a request, or user interactions with a web application.

Monitoring

Monitoring is the act of actively or passively paying attention to some observability signal. This can range from something as straightforward as an alert that tells you when your site is offline to something as complex as an org-wide dashboard that consolidates many measurements of business and system performance into a variety of Service Level Objectives (SLOs).

What is observability?

Observability is a process of understanding what’s going on in your system, and how it relates to your organization, business, or team. It’s the result of a combination of many factors:

- Your ability to ingest and query large volumes of structured data

- The ability of your systems and applications to emit a wide variety of semantically accurate telemetry

- Your organization’s ability to respond to and manage change

It’s a big topic, and it’s no surprise that there are many different definitions.

Often, you’ll hear observability referred to as having three pillars: logs, metrics, and traces. While it is true that observability relies on a variety of telemetry signals, telemetry is not observability. Having this data is necessary, but not sufficient for observability. These signals are important because they’re types of semantic telemetry. They include metadata that helps you understand how they should be used:

- A metric contains information about what it measures and how it’s recorded.

- Traces give you rich context from your application, showing you exactly what resources are used in a transaction.

- Logs, when properly structured, are invaluable at recording events that occur in the operation of your system.
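To make the trace bullet above more concrete, here’s a minimal sketch, using only Python’s standard library, of how individual spans from one request link together through parent IDs into a tree. The `Span` fields, service names, and IDs are simplified, hypothetical stand-ins for illustration, not the OpenTelemetry data model.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Span:
    # A deliberately simplified span; real tracing data carries far more fields.
    trace_id: str
    span_id: str
    parent_id: Optional[str]  # None marks the root span of the request
    service: str
    name: str
    duration_ms: float


def render_trace(spans: List[Span]) -> List[str]:
    """Return one indented line per span, nested under its parent.

    Assumes every span in `spans` belongs to the same trace.
    """
    children: Dict[Optional[str], List[Span]] = {}
    for span in spans:
        children.setdefault(span.parent_id, []).append(span)

    lines: List[str] = []

    def walk(parent_id: Optional[str], depth: int) -> None:
        for span in children.get(parent_id, []):
            indent = "  " * depth
            lines.append(f"{indent}{span.service}: {span.name} ({span.duration_ms} ms)")
            walk(span.span_id, depth + 1)

    walk(None, 0)  # start from the root span(s)
    return lines


# Hypothetical spans from one checkout request, received out of order.
spans = [
    Span("t1", "s3", "s2", "db", "SELECT orders", 42.0),
    Span("t1", "s1", None, "frontend", "GET /checkout", 120.0),
    Span("t1", "s2", "s1", "api", "load_cart", 80.0),
]

for line in render_trace(spans):
    print(line)
```

Run as-is, this prints the `frontend` span first, the `api` span indented beneath it, and the `db` span beneath that: the shape of the request, rebuilt from parent IDs alone.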

The key value of observability isn’t in the data; it’s in what you do with it. As an industry, we have spent too long arguing that observability is simply the ability to parse through gigabytes of JSON to find a needle in a haystack. Our customers, and our organizations, need more.

Observability allows you to ask, and more importantly answer, questions that run the gamut from tactical (“Which database shard is responsible for the current poor performance of this API, and which customers are impacted by it?”) to strategic (“How has our conversion funnel been improved by optimizations that the frontend teams have been making to the signup experience, broken down by device type and feature flag variant?”). It is the ability to take this data and make it valuable not just to how you build software, but to how you build a software business.
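As a toy illustration of the tactical question above, the sketch below keeps request events as flat records with many fields and slices them by whichever field you choose. The field names (`shard`, `customer_id`, `duration_ms`), the sample data, and the 500 ms “slow” threshold are all hypothetical; a real observability tool runs this kind of query over vastly more data.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical request events for one API endpoint; each is one flat, "wide" record.
events = [
    {"endpoint": "/api/orders", "shard": "shard-1", "customer_id": "acme", "duration_ms": 45},
    {"endpoint": "/api/orders", "shard": "shard-2", "customer_id": "globex", "duration_ms": 910},
    {"endpoint": "/api/orders", "shard": "shard-2", "customer_id": "initech", "duration_ms": 870},
    {"endpoint": "/api/orders", "shard": "shard-1", "customer_id": "globex", "duration_ms": 52},
    {"endpoint": "/api/orders", "shard": "shard-3", "customer_id": "acme", "duration_ms": 61},
]


def mean_by(events, dimension, value="duration_ms"):
    """Group events by any field and return the mean of `value` for each group."""
    groups = defaultdict(list)
    for event in events:
        groups[event[dimension]].append(event[value])
    return {key: mean(values) for key, values in groups.items()}


def impacted(events, filters, slow_ms=500, field="customer_id"):
    """Sorted distinct values of `field` on slow events that match every filter."""
    return sorted({
        event[field]
        for event in events
        if event["duration_ms"] >= slow_ms
        and all(event.get(key) == want for key, want in filters.items())
    })


latency_by_shard = mean_by(events, "shard")
worst_shard = max(latency_by_shard, key=latency_by_shard.get)
print(worst_shard, impacted(events, {"shard": worst_shard}))
```

With this sample data, the slowest shard is `shard-2`, and the affected customers are `globex` and `initech`. Because every field is available at query time, swapping `"shard"` for `"customer_id"` asks a different question without any pre-aggregation.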

What is telemetry data?

Telemetry data is the foundation of observability; without it, you’re literally in the dark. This data is most valuable when it is both structured and semantic. Structured data is another way of saying “the data has a consistent format.” It’s the difference between a log message that looks like this:

```
12:47:01 "error in apiHandler: greebles were insufficiently grobbled"
```

And one that looks like this:

```
{
  "timestamp": "12:47:01 +0000",
  "message": "error in apiHandler",
  "type": "exception",
  "body": "greebles were insufficiently grobbled",
  "stackTrace": …
}
```

Semantic telemetry data extends this concept further by adding schemas that humans or other systems can use to interpret the fields in structured data. The best example of structured, semantic telemetry data comes from the OpenTelemetry project.

This project aims to standardize how telemetry is created and collected for software systems, and it is used by thousands of companies and developers worldwide. It works with most existing telemetry libraries, allowing you to modernize the data you already have by mapping it into one of its schemas for traces, metrics, or logs.

You can also use it to replace existing proprietary instrumentation, making your telemetry data vendor-agnostic. It’s a great tool both for modernizing your existing telemetry and as a standard for new services.
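The structured log example earlier in this section is easy to reproduce in code. As a rough sketch using only Python’s standard library, the snippet below emits the same error twice: once as an unstructured string, and once as a structured JSON event whose keys borrow the naming style of OpenTelemetry’s semantic conventions (`service.name`, `http.response.status_code`, `exception.message`). The service name, route, exception class, and timestamp are hypothetical, and in a real service you would usually let an OpenTelemetry SDK produce these attributes rather than writing them by hand.

```python
import json
from datetime import datetime, timezone


def unstructured(now: datetime) -> str:
    # Human-readable, but every consumer has to parse the free text its own way.
    return f'{now:%H:%M:%S} "error in apiHandler: greebles were insufficiently grobbled"'


def structured(now: datetime) -> str:
    # Consistent keys make each field individually queryable. The dotted names
    # follow the style of OpenTelemetry semantic conventions (illustrative only).
    event = {
        "timestamp": now.isoformat(),
        "service.name": "api",                 # hypothetical service name
        "http.request.method": "POST",
        "http.route": "/greebles",             # hypothetical route
        "http.response.status_code": 500,
        "exception.type": "GrobbleError",      # hypothetical exception class
        "exception.message": "greebles were insufficiently grobbled",
    }
    return json.dumps(event)


now = datetime(2024, 1, 1, 12, 47, 1, tzinfo=timezone.utc)  # hypothetical timestamp
print(unstructured(now))
print(structured(now))
```

Because the structured form parses straight into fields, a question like “all 500s on this route” becomes a simple filter rather than a regular expression over message text.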

What is monitoring?

Monitoring is something you do as part of your observability practice, usually with the aid of observability tooling. This can include watching a dashboard to track the status of a rollout, inspecting the output of your service to track down a bug, or keeping tabs on your SLOs to ensure that you’re working on the most important things.

Monitoring can be performed actively, such as when you’re trying to figure out what factors are contributing to an outage or performance regression, or passively, like in our SLO example above.
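To ground the passive SLO example, here’s a minimal sketch of the arithmetic behind an availability SLO: the fraction of good events, and how much of the error budget (the failures the target still allows) remains. The 99.9% target, the 30-day window, and the event counts are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class SLOReport:
    compliance: float        # fraction of events that were "good"
    budget_remaining: float  # 1.0 = untouched, 0.0 or below = exhausted


def evaluate_slo(good: int, total: int, target: float) -> SLOReport:
    """Compare good/total event counts against an SLO target such as 0.999."""
    if not 0 < target < 1:
        raise ValueError("target must be between 0 and 1, exclusive")
    if total == 0:
        # No traffic yet: nothing has failed, so the whole budget remains.
        return SLOReport(compliance=1.0, budget_remaining=1.0)
    bad = total - good
    allowed_bad = (1 - target) * total  # the error budget, expressed in events
    return SLOReport(compliance=good / total, budget_remaining=1 - bad / allowed_bad)


# Hypothetical 30-day window: 1,000,000 requests, 400 of which failed.
report = evaluate_slo(good=999_600, total=1_000_000, target=0.999)
print(f"compliance={report.compliance:.2%}, budget remaining={report.budget_remaining:.0%}")
```

With these numbers, the service meets its SLO (99.96% against a 99.9% target) and has about 60% of its error budget left: 1,000 failures were allowed and 400 have been spent.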

The key thing to remember is that monitoring isn’t just a synonym for observability. It’s something you do, not something you have. Let’s take a deeper look at the distinction.

Observability vs. monitoring

If you’ve been working in software for a while, you’ve probably noticed that a few years ago there was a shift in how monitoring companies talked about themselves. Almost overnight, they slapped on a fresh coat of paint and rebranded as “observability” tools. This has been a source of confusion for many: what changed? In most cases, not that much! That’s not to say anyone is being misleading; rather, our collective understanding of the distinction between these disciplines has matured.

Traditional monitoring approaches that existing tools support tend to have a few things in common:

- Reactive, interrupt-driven workflows (get paged when something’s going wrong)

- Collecting and processing large amounts of telemetry data, for a price

- Siloed analysis workflows, segregated by signal type (metrics, logs, traces)

This approach leads to a lot of pain for teams and organizations. Your options to deal with these challenges are limited: you’re forced to employ blunt cost controls, slashing resolution and telemetry scope in order to stay in budget. This leads to blind spots in your system and makes it harder to justify the value of your observability practice.

Observability doesn’t have to be this way, though. By moving towards a modern, strategic, and holistic observability practice, you can realize the benefits of total system visibility and better alignment across engineering and the business towards working on what matters most: delighting customers.

How telemetry, observability, and monitoring fit together

In a modern observability practice, semantic telemetry is combined with active and passive monitoring to build a holistic view of not only your software system, but how it impacts your business. Enhancing your system telemetry with customer analytics, code quality metrics, and other organizational metadata unlocks your ability to ask and answer questions you never could before—not just “is the system up?” but “how long does it take for updates to roll out to our user base so we can deprecate legacy systems?” and “which teams are performing best at database migrations, and what can we learn from them?”
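Here’s a hedged sketch of what enhancing system telemetry with organizational metadata can look like in practice: each event is joined with lookup tables at write time, so later queries can group by team or customer tier directly. The ownership and customer-tier tables, field names, and team names are all hypothetical.

```python
# Hypothetical organizational metadata, e.g. exported from a service catalog and a CRM.
SERVICE_OWNERS = {"checkout": "payments-team", "search": "discovery-team"}
CUSTOMER_TIERS = {"acme": "enterprise", "globex": "free"}


def enrich(event: dict) -> dict:
    """Return a copy of `event` with team and customer-tier attributes added."""
    enriched = dict(event)  # leave the original event untouched
    enriched["team"] = SERVICE_OWNERS.get(event.get("service"), "unowned")
    enriched["customer.tier"] = CUSTOMER_TIERS.get(event.get("customer_id"), "unknown")
    return enriched


raw = {"service": "checkout", "customer_id": "acme", "duration_ms": 230}
print(enrich(raw))
```

Once every event carries `team` and `customer.tier`, a question like “which teams’ changes slow down enterprise customers?” is a group-by over fields that already exist on the data, not a manual join across tools.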

In addition, a holistic approach to observability unlocks powerful production debugging and monitoring capabilities. Rather than relying on reactive and noisy alerts, SLOs let you focus on just the changes that matter. You can spot problems before they become fires and leverage artificial intelligence to reallocate resources and notify the appropriate teams for proactive interventions. Instead of staring at potentially outdated and confusing dashboards, you can explore data through interactive and powerful real-time queries, enabling everyone on your team to be a performance engineer.
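One common way to make SLO-based alerts less noisy is multiwindow burn-rate alerting, as described in Google’s Site Reliability Workbook: page someone only when the error budget is being spent much faster than the SLO allows, over both a long and a short window. The sketch below assumes a 99.9% target and that workbook’s example threshold of 14.4 over a 1-hour and a 5-minute window; the event counts are hypothetical, and it illustrates the general technique rather than how any particular product implements it.

```python
from typing import Tuple


def burn_rate(bad: int, total: int, target: float) -> float:
    """How fast the error budget is being spent; 1.0 means exactly on budget."""
    if total == 0:
        return 0.0
    error_rate = bad / total
    return error_rate / (1 - target)


def should_page(long_window: Tuple[int, int], short_window: Tuple[int, int],
                target: float = 0.999, threshold: float = 14.4) -> bool:
    """Page only if both windows burn faster than `threshold`.

    Each window is a (bad, total) pair of event counts. The long window (e.g. 1 hour)
    shows the problem is significant; the short one (e.g. 5 minutes) shows it is ongoing.
    """
    return (burn_rate(*long_window, target) > threshold
            and burn_rate(*short_window, target) > threshold)


# Hypothetical counts: a sustained 2% error rate is roughly a 20x burn for a 99.9% SLO.
print(should_page(long_window=(1_200, 60_000), short_window=(100, 5_000)))
# Same hour, but the errors have stopped: the short window is clean, so no page.
print(should_page(long_window=(1_200, 60_000), short_window=(0, 5_000)))
```

The first call returns `True` and the second `False`. Requiring both windows keeps a brief blip from paging anyone, and lets the alert clear quickly once a real problem has been fixed.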

Choosing an observability platform

There are a lot of options for observability tools, but few offer a holistic approach to observability. Traditional “observability 1.0” tools suffer from the problems we identified above: uncontrolled costs, siloed workflows, and difficult value realization, especially at the top end. When evaluating tooling, here are some questions you should consider in order to make the best choices:

- Does the tool support OpenTelemetry? The project is rapidly leading and consolidating how telemetry is created and processed. Hundreds of libraries support it, with more being added every month, and the project continues to evolve to cover more use cases.

- Does the tool take an analytics-based approach, or does it rely on static dashboards and reactive alerts? Your ability to slice and dice telemetry data across many dimensions is directly tied to your ability to create proactive monitoring and investigation workflows.

- Are you able to customize your storage and sampling needs in order to better control costs? Holistic observability tools will offer many options, from BYOD (bring your own datastore) to hybrid approaches. In addition, how does the tool treat your data? As separate, siloed query experiences, or as a unified stream of correlated telemetry?

- What pricing model does the tool use? Does it bill by users, hosts, nodes, or containers? Are you penalized with unpredictable pricing spikes for creating custom attributes or metrics? Look for tools that offer simple, event- or storage-based pricing (hint: we’re event-based).

Observability with Honeycomb

Honeycomb isn’t just another observability platform. We practically invented the term, and we continue to lead the industry as the first OpenTelemetry-native tool. Our contributions speak for themselves.

It might seem like a challenge to make the switch from traditional observability tools to this strategic approach, but with Honeycomb, you’re in good company.

Need proof? Meet Pax8, who chose Honeycomb because of its observability 2.0 approach.

Our sales and customer success teams have helped hundreds of teams just like yours make the switch. Learn more by booking a demo today.
